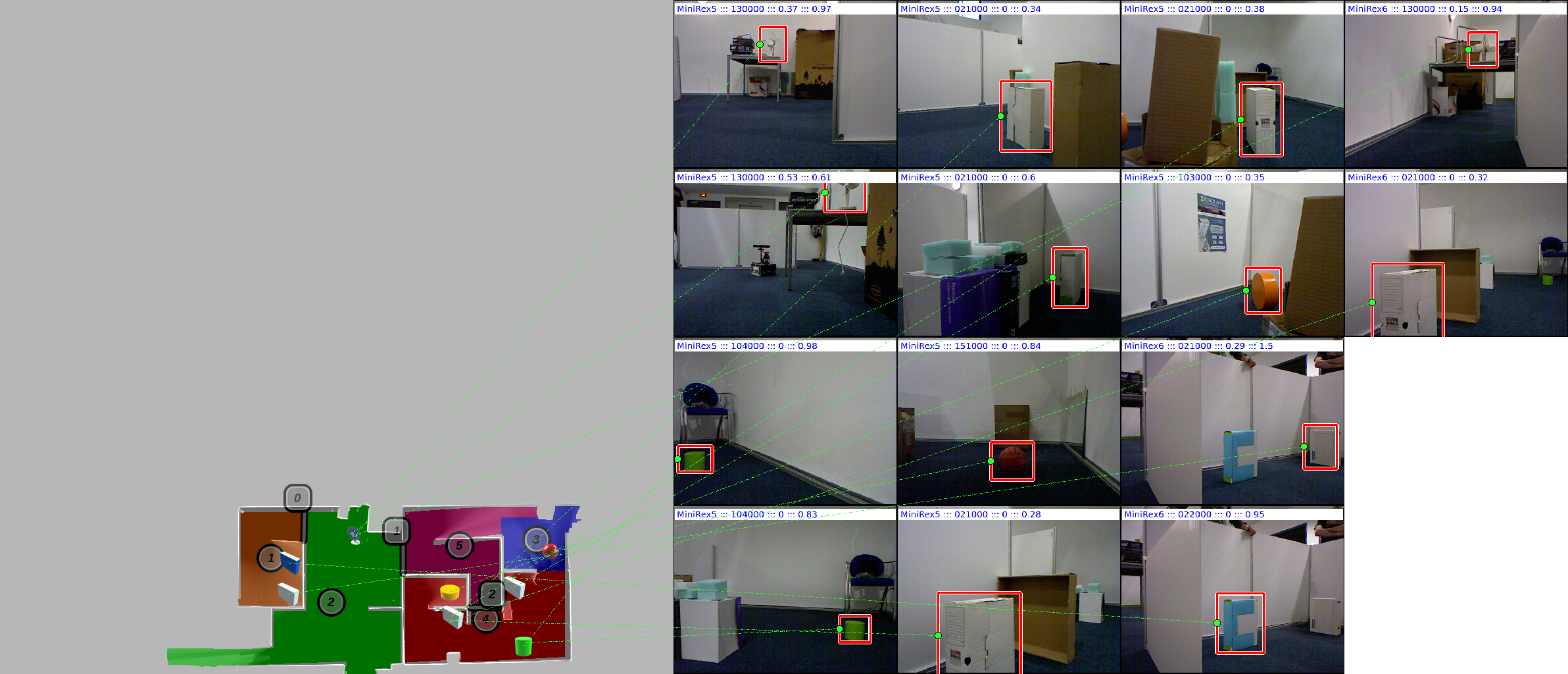

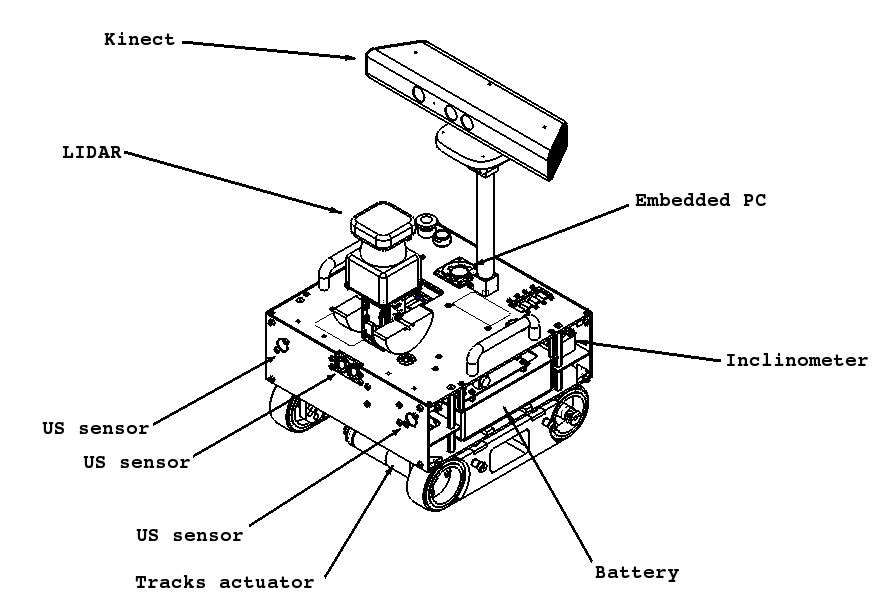

The last (but not least!) scientific issue is object recognition: the robots have to locate and identify objects in the environment (fans, bottles, chairs, plants, etc.). A Microsoft Kinect sensor is mounted on each robot. While navigating and exploring the environment, the robots perform 3D captures, which are then analysed to locate objects. The first step of object recognition is a region-growing segmentation of the Kinect depth map. Feature parameters are then computed for each candidate region and compared with a database of pre-processed objects, and a classifier selects the closest object in the search space. A new classifier, named Class-O-matic, has been proposed; it isolates and keeps only the essential points from the training data. Some illustrations of the classifier's performance can be seen in the following figures.

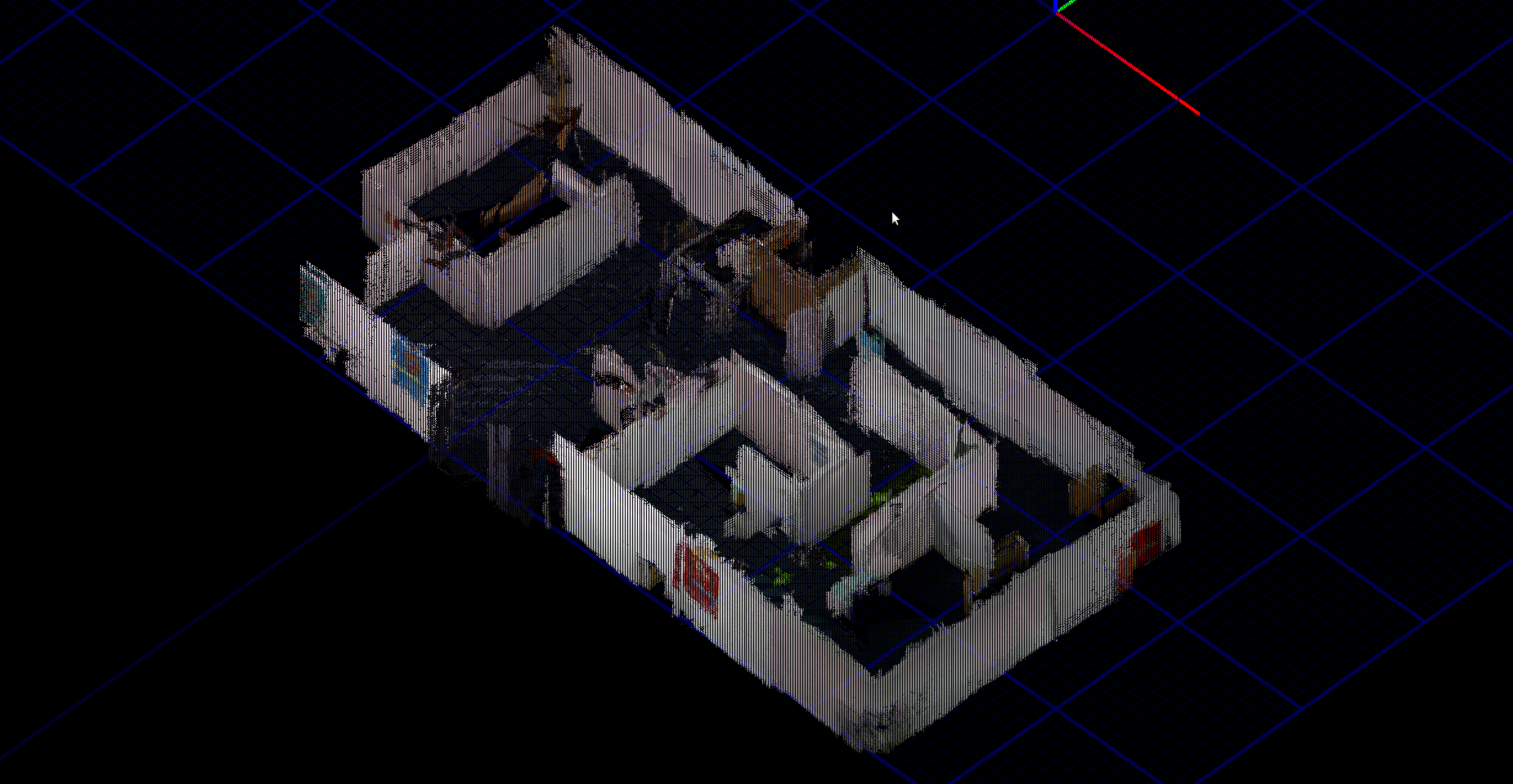

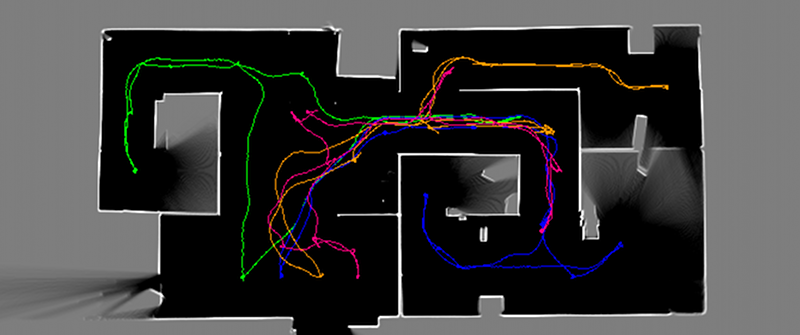

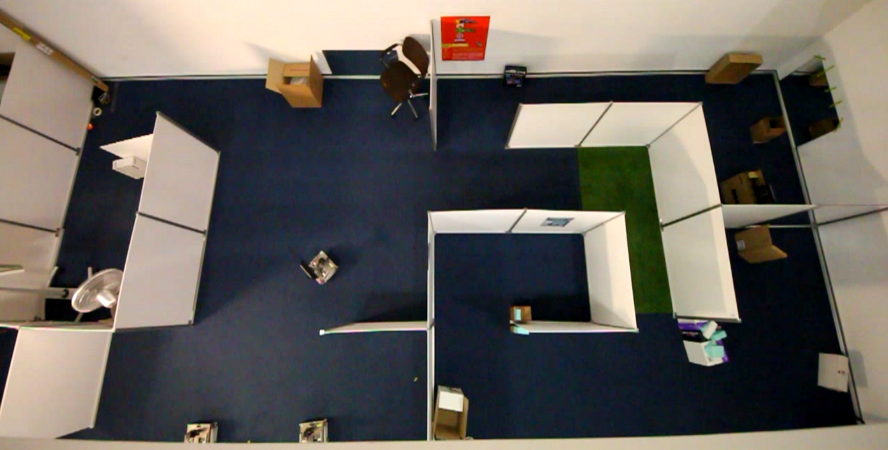
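The recognition pipeline described above (region growing on the depth map, feature parameters per candidate, closest match against a training database) can be sketched as follows. This is a minimal illustration under stated assumptions, not the Cart-O-matic code: the 4-connected growing rule, the depth tolerance `max_step`, the toy feature vector and the object labels are all hypothetical, and Hart's condensed nearest-neighbour rule is swapped in only as a well-known example of "keeping the essential training points". It is not the Class-O-matic algorithm.

```python
from collections import deque
import math


def region_growing(depth, max_step=0.02, min_size=3):
    """Segment a depth map (list of rows, metres; 0 = no reading) into regions.

    4-connected neighbours join a region when their depths differ by less than
    max_step (hypothetical threshold). Regions smaller than min_size are dropped.
    """
    rows, cols = len(depth), len(depth[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or depth[r0][c0] <= 0:
                continue
            seen[r0][c0] = True
            queue, region = deque([(r0, c0)]), []
            while queue:  # breadth-first growth from the seed pixel
                r, c = queue.popleft()
                region.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols and not seen[nr][nc]
                            and depth[nr][nc] > 0
                            and abs(depth[nr][nc] - depth[r][c]) < max_step):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(region) >= min_size:
                regions.append(region)
    return regions


def features(region, depth):
    """Toy feature vector: pixel count, bounding-box height and width, mean depth."""
    rs = [r for r, _ in region]
    cs = [c for _, c in region]
    mean_depth = sum(depth[r][c] for r, c in region) / len(region)
    return (len(region), max(rs) - min(rs) + 1, max(cs) - min(cs) + 1, mean_depth)


def nearest_label(x, prototypes):
    """1-nearest-neighbour: label of the closest (vector, label) prototype."""
    return min(prototypes, key=lambda p: math.dist(x, p[0]))[1]


def condense(training):
    """Hart's condensed nearest-neighbour rule: keep only the samples needed
    for 1-NN to classify the whole training set correctly."""
    kept = [training[0]]
    changed = True
    while changed:
        changed = False
        for x, label in training:
            if (x, label) not in kept and nearest_label(x, kept) != label:
                kept.append((x, label))
                changed = True
    return kept


# Toy 3x5 depth map (metres): two flat surfaces separated by missing readings (0).
depth = [
    [1.00, 1.00, 0.0, 2.00, 2.01],
    [1.01, 1.00, 0.0, 2.00, 2.00],
    [1.00, 1.01, 0.0, 2.01, 2.00],
]
# Hypothetical labelled training features: (pixels, height, width, mean depth).
training = [
    ((6, 3, 2, 1.0), "bottle"),
    ((6, 3, 2, 2.0), "chair"),
    ((5, 3, 2, 1.1), "bottle"),
]
regions = region_growing(depth)
prototypes = condense(training)  # the third sample is redundant and is not kept
labels = [nearest_label(features(reg, depth), prototypes) for reg in regions]
print(labels)  # ['bottle', 'chair']
```

Condensing the training set means each candidate is compared with fewer stored samples at recognition time; a real system would also normalise the features, since pixel counts and depths in metres have very different scales.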

At the end of the mission, the data from all the robots is centralized and merged into a single map. The rooms of the environment are extracted and the results are gathered into a single file, as illustrated in the following figure. All the 3D captures are also collated and integrated into a single 3D map.
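How the maps are merged is not detailed here. One common way to fuse occupancy grids built by several robots is to sum their per-cell log-odds. The sketch below assumes the grids have already been expressed in a shared frame with the same origin, resolution and size (the alignment step itself is not covered) and that evidence from different robots is independent; the grid layout and function names are hypothetical.

```python
import math


def prob_to_logodds(p):
    """Occupancy probability in (0, 1) to log-odds (0.5 maps to 0)."""
    return math.log(p / (1.0 - p))


def logodds_to_prob(l):
    """Log-odds back to an occupancy probability."""
    return 1.0 / (1.0 + math.exp(-l))


def merge_grids(grids):
    """Fuse aligned occupancy grids (lists of rows of probabilities in (0, 1)).

    Assumes all grids share origin, resolution and size, and treats each
    robot's evidence as independent, so log-odds are summed cell by cell.
    """
    rows, cols = len(grids[0]), len(grids[0][0])
    merged = []
    for r in range(rows):
        row = []
        for c in range(cols):
            l = sum(prob_to_logodds(g[r][c]) for g in grids)
            row.append(logodds_to_prob(l))
        merged.append(row)
    return merged


# Two hypothetical 2x2 grids; 0.5 means "unknown".
robot_a = [[0.5, 0.9], [0.2, 0.5]]
robot_b = [[0.9, 0.9], [0.5, 0.5]]
merged = merge_grids([robot_a, robot_b])
# Cell seen as likely occupied by both robots: about 0.988.
print(merged[0][1])
```

An unknown cell (0.5) contributes nothing, so each robot's observations fill in the other's unexplored areas, while cells observed by both robots become more certain.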

- P. Lucidarme and S. Lagrange (2011). Slam-O-matic: SLAM algorithm based on global search of local minima. FR1155625, filed on June 24, 2011.
- R. Guyonneau, S. Lagrange, L. Hardouin and P. Lucidarme (2011). Interval analysis for kidnapping problem using range sensors. SWIM 2011.
- R. Guyonneau, S. Lagrange and L. Hardouin (2012). Mobile robots pose tracking: a set-membership approach using a visibility information. ICINCO 2012.
- R. Guyonneau, S. Lagrange, L. Hardouin and P. Lucidarme (2012). The kidnapping problem of mobile robots: a set membership approach. CAR 2012.

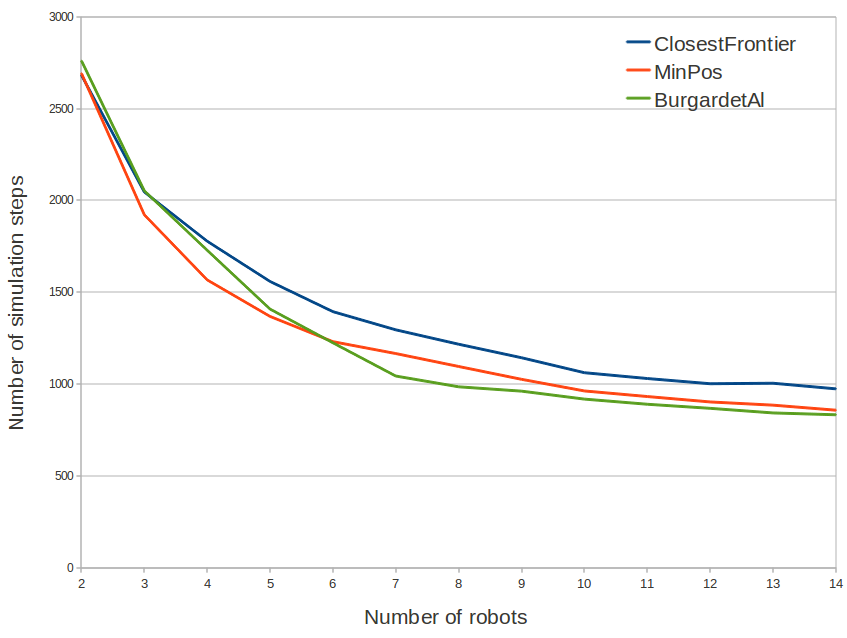

- A. Bautin, O. Simonin and F. Charpillet (2011). Towards a communication free coordination for multi-robot exploration. CAR 2011.
- A. Bautin, O. Simonin and F. Charpillet (2011). Stratégie d'exploration multi-robot fondée sur les champs de potentiels artificiels [Multi-robot exploration strategy based on artificial potential fields]. JFSMA 2011. Extended version pre-selected for RIA 2012 (Revue d'Intelligence Artificielle).

Cart-O-matic

B.